Abstract

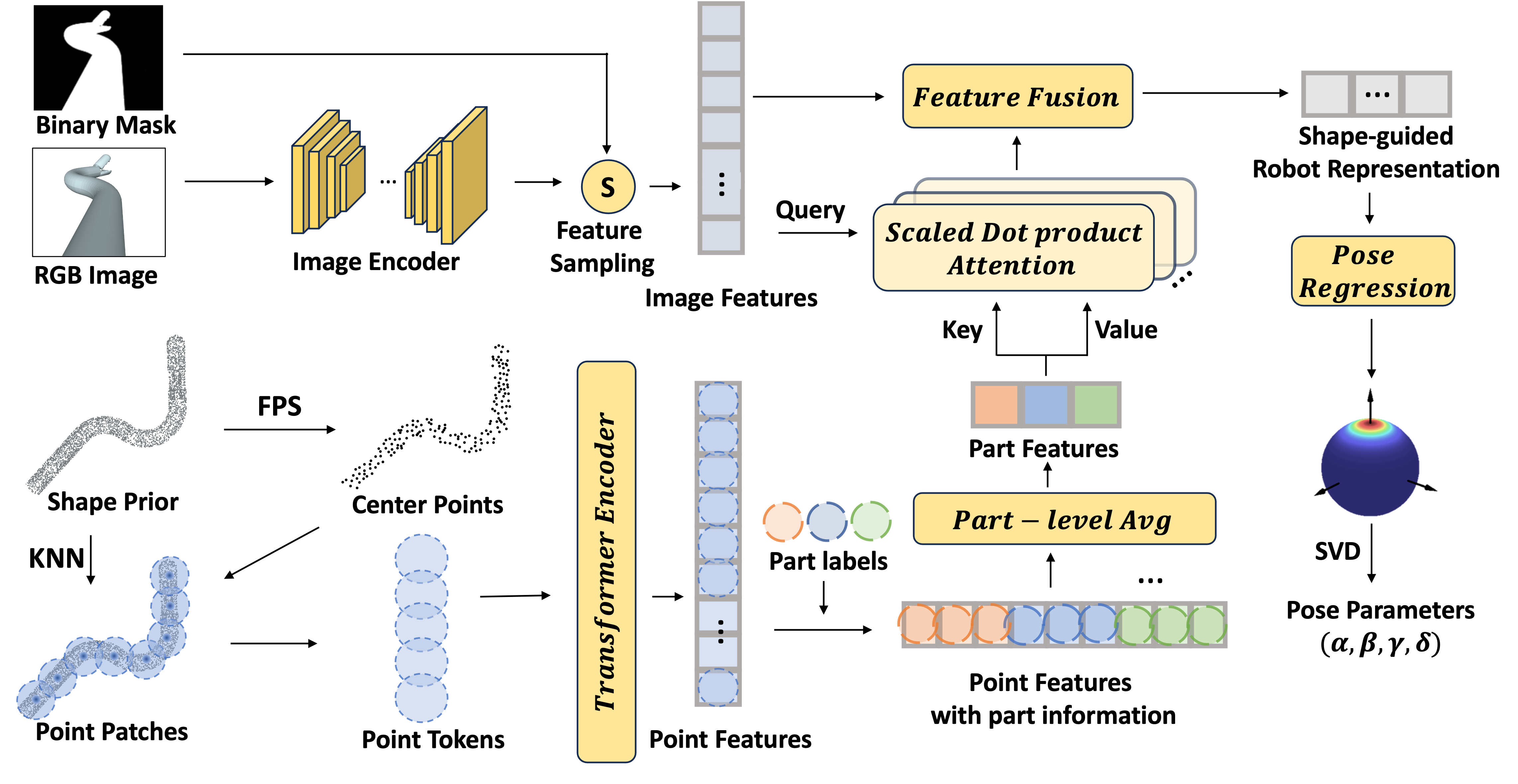

Accurate estimation of both the external orientation and the internal bending angle is crucial for understanding a flexible robot's state within its environment. However, existing sensor-based methods are limited by cost, environmental constraints, and integration issues, and conventional image-based methods struggle with the shape complexity of flexible robots. In this paper, we propose a novel shape-guided configuration-aware learning framework for image-based flexible robot pose estimation. Inspired by recent advances in 2D-3D joint representation learning, we leverage the 3D shape prior of the flexible robot to enhance its image-based shape representation. Concretely, we first extract the part-level geometry representation of the 3D shape prior, then adapt this representation to the image by querying the image features corresponding to different robot parts. Furthermore, we present an effective mechanism to dynamically deform the shape prior, which mitigates the shape difference between the adopted shape prior and the flexible robot depicted in the image. This more expressive shape guidance further boosts the image-based robot representation and can be effectively used for flexible robot pose refinement. Extensive experiments on surgical flexible robots demonstrate the advantages of our method over a series of keypoint-based, skeleton-based, and direct regression-based methods.

Flexible Robot Pose Estimation

Pose Estimation with Configuration-aware Shape Guidance

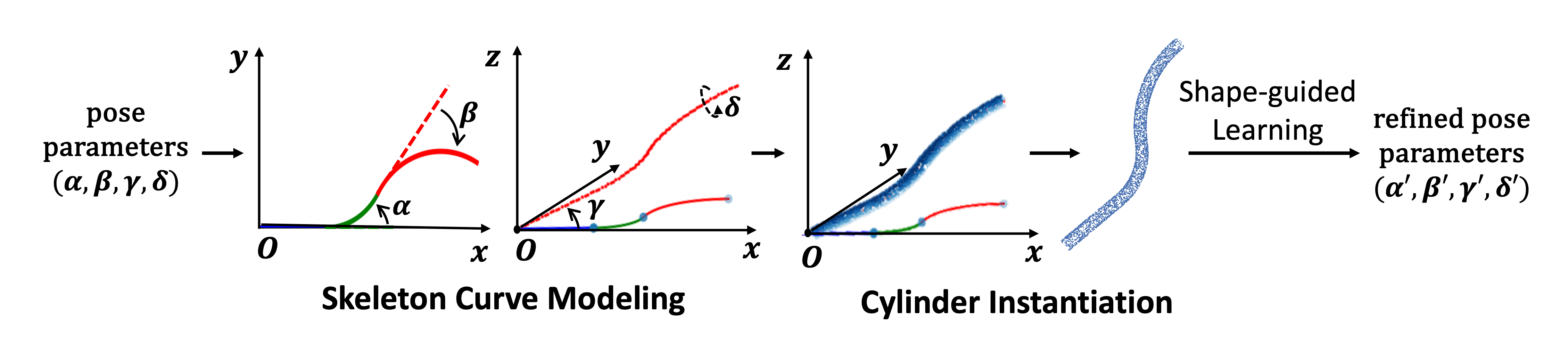

Pose Refinement with Configuration-aware Shape Deformation

Results

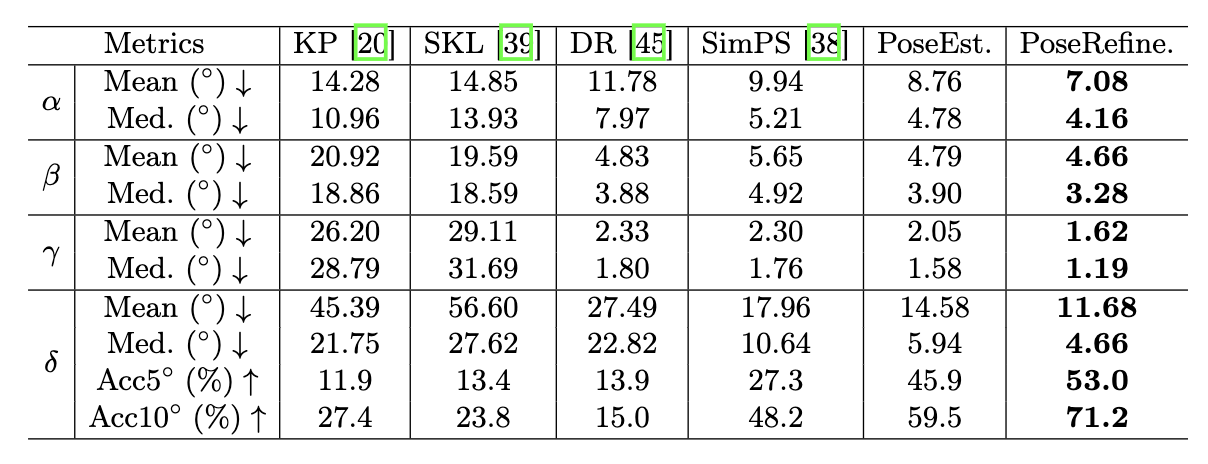

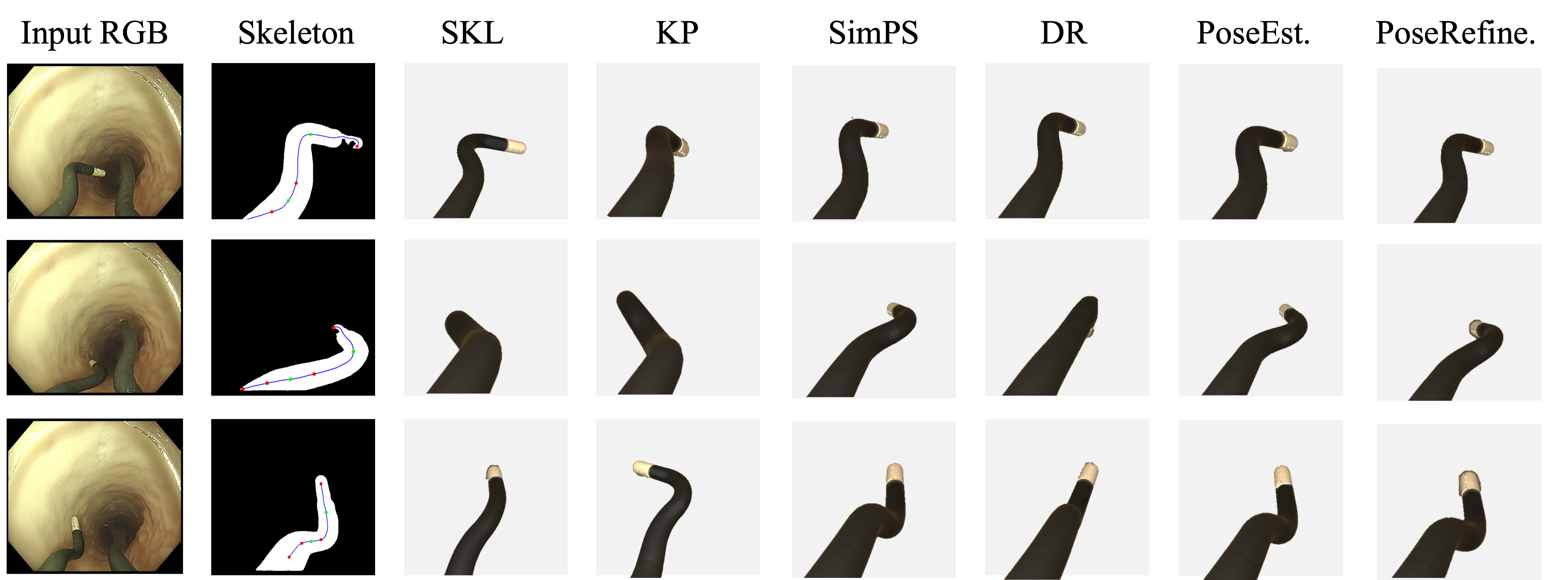

Does the proposed method surpass existing image-based approaches?

Conventional keypoint-based (KP) and skeleton-based (SKL) methods perform poorly when localizing keypoints and extracting complete skeletons from flexible robots with high degrees of freedom (DoF). Regression-based methods (DR, SimPS) outperform KP and SKL, but they still fall short because they lack an effective mechanism to model the variation in flexible robot shapes. In contrast, our method PoseEst. leverages the informative shape guidance to enhance the flexible robot shape representation, and PoseRefine. further improves the representation by deforming the flexible robot shape with the initial pose parameters. These strategies significantly improve the accuracy of pose estimation.

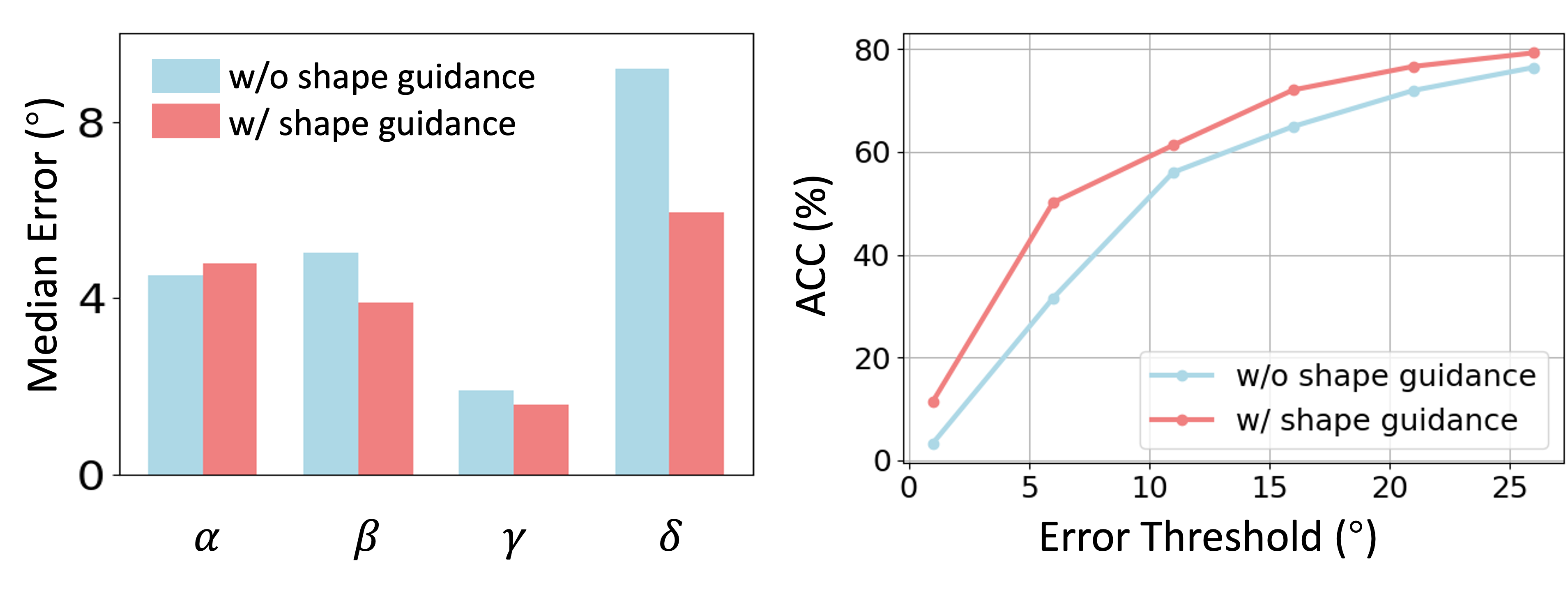

How effective is the shape guidance in enhancing the accuracy of pose estimation?

Leveraging the shape guidance reduces the prediction error for most pose parameters. In addition, the model with shape guidance consistently achieves higher accuracy across different error thresholds. Removing the robot configuration information from the shape guidance consistently degrades the pose accuracy.
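As a minimal sketch of what "accuracy across different error thresholds" can mean, the snippet below computes the fraction of samples whose error falls at or below each threshold. The function name, the threshold values, and the sample errors are illustrative assumptions, not the evaluation code or data used in the paper.

```python
def accuracy_at_thresholds(errors, thresholds):
    """Fraction of samples whose error is at or below each threshold."""
    if not errors:
        raise ValueError("errors must be non-empty")
    return {t: sum(e <= t for e in errors) / len(errors) for t in thresholds}


# Hypothetical per-sample angular errors in degrees (illustrative values only).
errors_deg = [1.2, 3.8, 4.9, 7.5, 12.0]
print(accuracy_at_thresholds(errors_deg, thresholds=[2, 5, 10]))
# prints {2: 0.2, 5: 0.6, 10: 0.8}
```

Reporting accuracy at several thresholds shows whether a method helps only on easy samples or also at strict tolerances.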

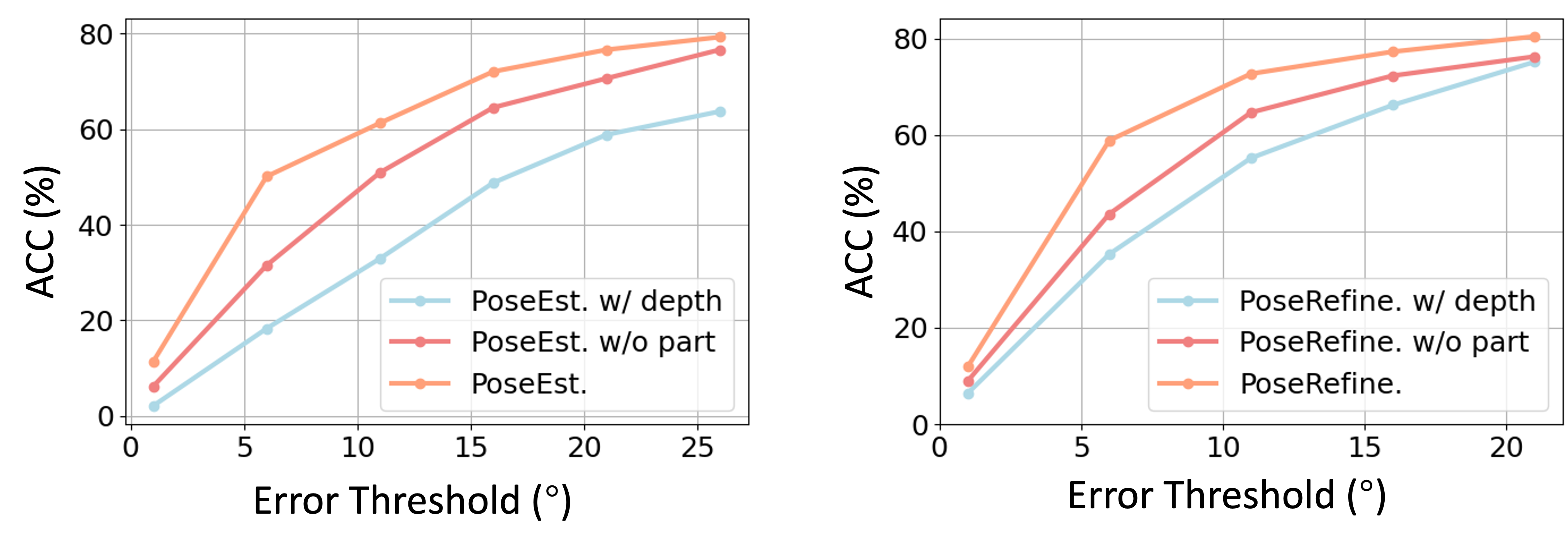

Does the shape prior guidance outperform depth-based counterparts?

We recover the depth map from the image with a pre-trained depth prediction Transformer, lift the flexible robot to 3D, and extract the geometry feature from the robot point cloud for pose estimation. In both the estimation and refinement stages, the depth-based counterpart is inferior to ours, which we attribute primarily to the severe shape distortions caused by depth noise.
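The "lift to 3D" step of such a depth-based baseline can be sketched as a standard pinhole back-projection of the robot pixels. This is an illustration under stated assumptions: a pinhole camera with known intrinsics `fx`, `fy`, `cx`, `cy` and a given robot segmentation mask, neither of which is specified on this page, and `backproject_depth` is a hypothetical helper name.

```python
import numpy as np


def backproject_depth(depth, mask, fx, fy, cx, cy):
    """Lift masked depth pixels to 3D camera-frame points with a pinhole model.

    depth: (H, W) array of depth along the optical axis.
    mask:  (H, W) boolean array marking robot pixels (assumed to be given).
    Returns an (N, 3) array of [X, Y, Z] points.
    """
    v, u = np.nonzero(mask)      # pixel rows (v) and columns (u) of robot pixels
    z = depth[v, u]
    x = (u - cx) * z / fx        # horizontal offset scaled by depth
    y = (v - cy) * z / fy        # vertical offset scaled by depth
    return np.stack([x, y, z], axis=1)


# Toy example: a single robot pixel at row 2, column 3 with depth 2.0.
depth = np.full((4, 4), 2.0)
mask = np.zeros((4, 4), dtype=bool)
mask[2, 3] = True
points = backproject_depth(depth, mask, fx=2.0, fy=2.0, cx=1.0, cy=1.0)
print(points)  # one point at X=2, Y=1, Z=2
```

Because every 3D point scales directly with its predicted depth, noise in the depth map translates into distortion of the recovered robot shape.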

Can the proposed method be applied to flexible robots with diverse configurations?

We modified the robot arm by varying the arm thickness (Thick.), arm length (Len.), and the number of segments (Num.). The results demonstrate that our method adapts smoothly to robots with diverse configurations and surpasses the most competitive baseline from the main results.

Is the proposed method robust under various challenging surgical environments?

We conducted experiments under challenging visual conditions that typically arise in surgery, including overly bright or dark lighting (Lighting), visual occlusions caused by flushing water and bubbles (Occlusion), and image blur caused by robot motion (Scope Rot.). With the help of 3D shape guidance, our method maintains strong performance in these challenging scenarios.

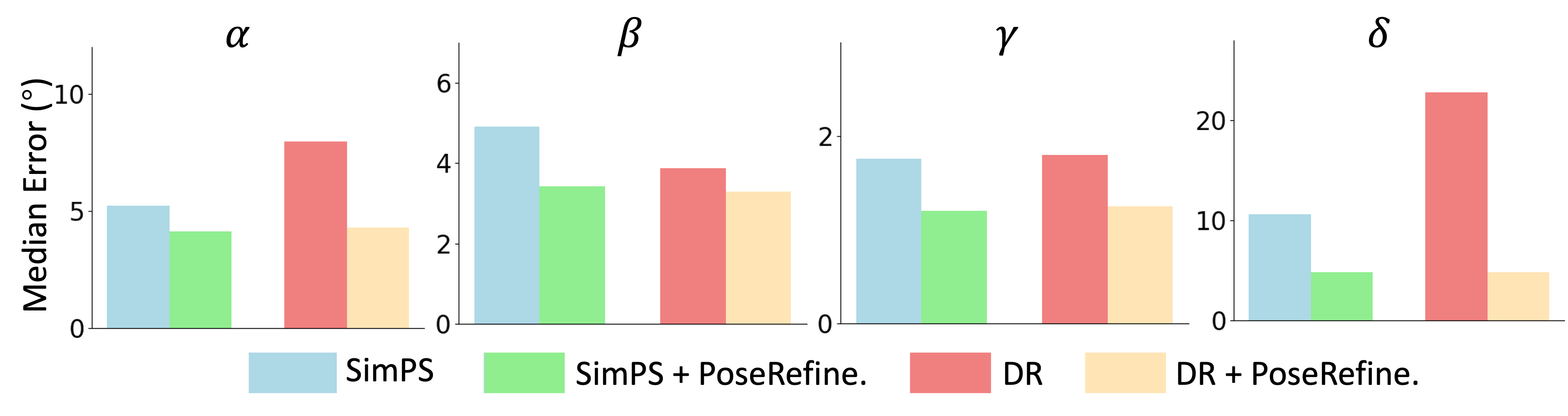

Is the pose refinement method generally effective?

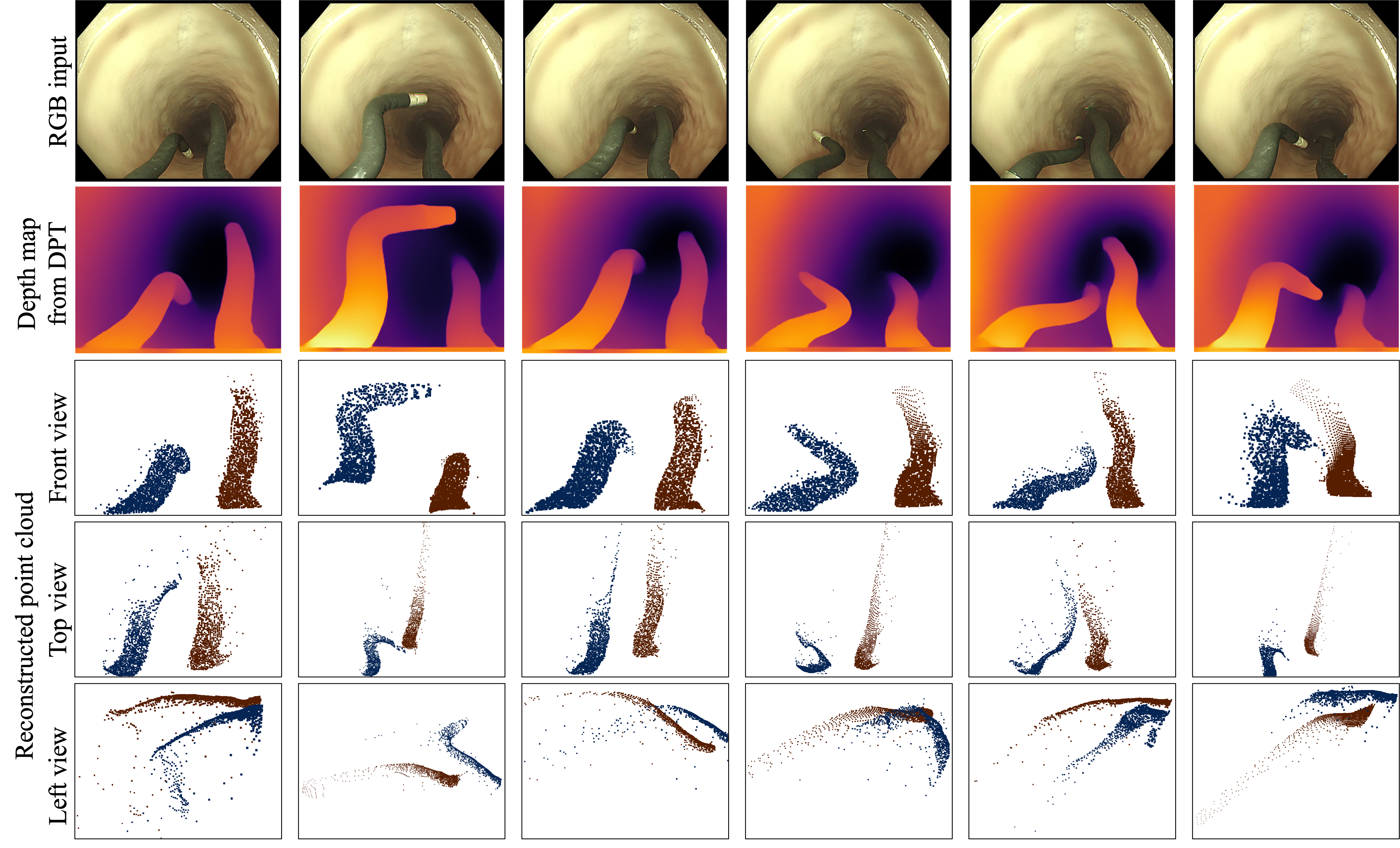

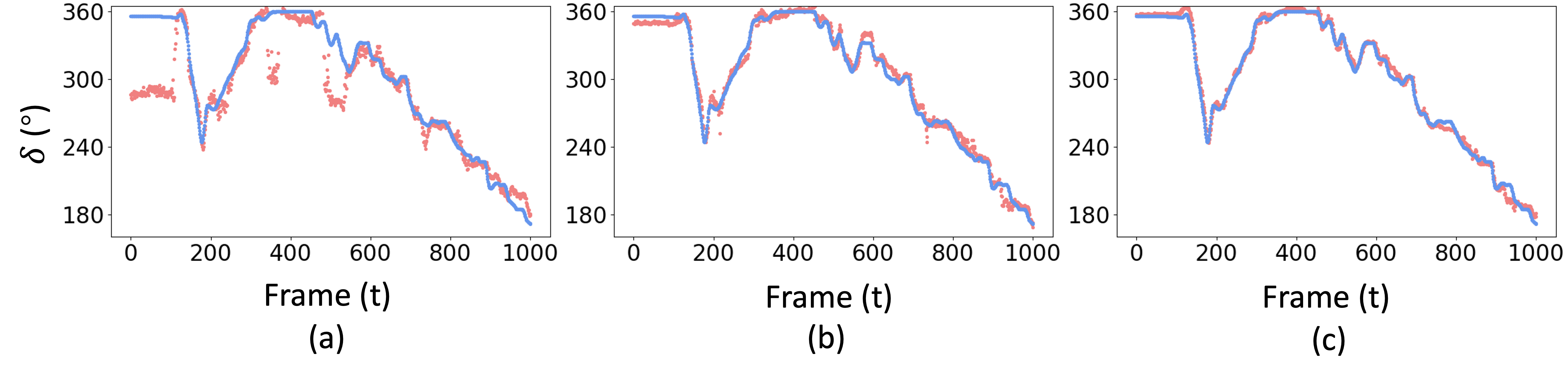

We evaluated the model in two different scenarios. First, we used it to refine the pose predictions from other baseline methods (Figure 8). Second, we took the pose prediction from the previous frame as the initial robot pose for the current frame, which is similar to robot pose tracking (Figure 9). The results indicate that the pose refinement model significantly improves both the average accuracy and the prediction robustness over the whole sequence.
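The second scenario amounts to a simple loop in which each frame is refined starting from the previous frame's result. The sketch below illustrates only that control flow. `refine` is a hypothetical stand-in for the refinement model, and the scalar "poses" in the usage example are placeholders rather than real pose parameters.

```python
def track_sequence(frames, initial_pose, refine):
    """Refine each frame, starting from the previous frame's refined pose."""
    poses = []
    pose = initial_pose
    for frame in frames:
        # `refine` is a hypothetical callable: (frame, initial pose) -> refined pose.
        pose = refine(frame, pose)
        poses.append(pose)
    return poses


# Toy stand-in: the "refined pose" moves halfway from the initial pose to the frame value.
toy_refine = lambda frame, pose: 0.5 * (pose + frame)
print(track_sequence([2.0, 4.0], initial_pose=0.0, refine=toy_refine))
# prints [1.0, 2.5]
```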

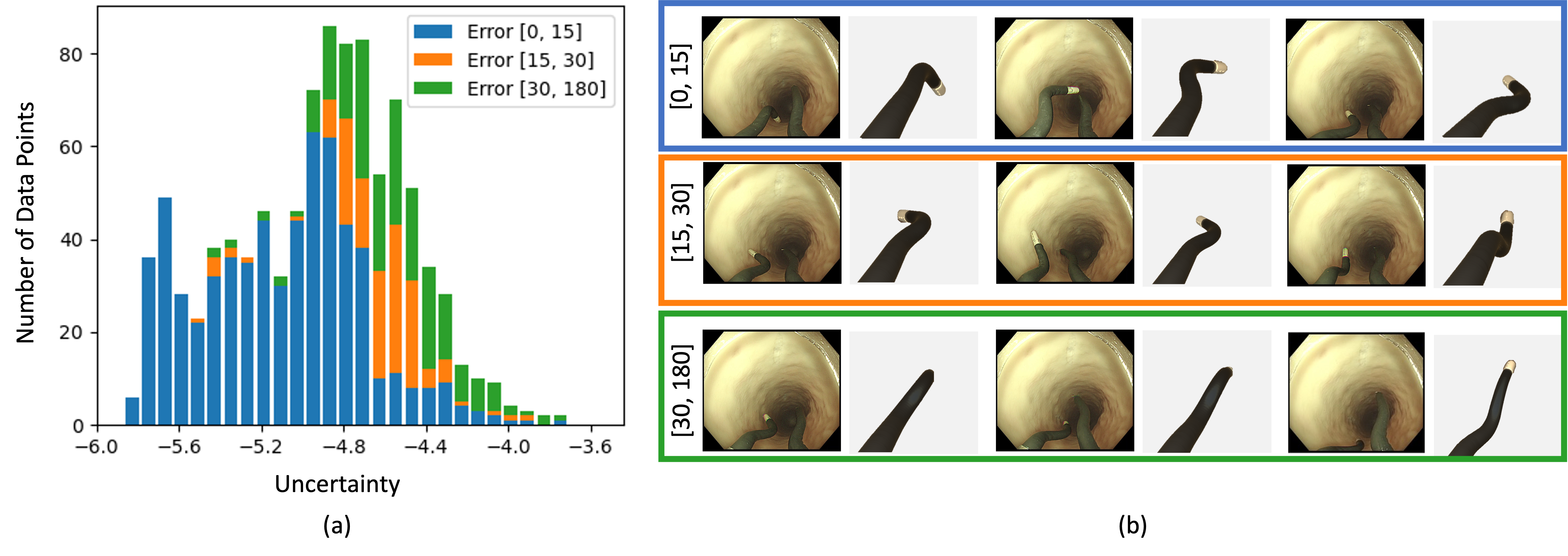

Does the uncertainty value reflect the pose estimation quality?

We adopt the matrix Fisher distribution to construct a probabilistic model over rotation matrices, which improves pose estimation. It provides both the pose estimate and the reliability of the prediction. The results suggest that data with greater uncertainty are more likely to have larger errors, verifying that the uncertainty is an effective indicator of pose quality.
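For readers unfamiliar with the matrix Fisher distribution: its density over rotations R is proportional to exp(tr(FᵀR)) for a 3x3 parameter matrix F. The sketch below shows the standard way to read a point estimate off F, namely taking the mode via a proper singular value decomposition. How the paper turns F into an uncertainty value is not stated on this page, so the function only returns the proper singular values, which grow as the distribution becomes more concentrated. `matrix_fisher_mode` is a hypothetical helper name.

```python
import numpy as np


def matrix_fisher_mode(F):
    """Return the mode rotation and proper singular values for parameter F."""
    U, s, Vt = np.linalg.svd(F)
    d = np.sign(np.linalg.det(U @ Vt))   # +1 or -1; keeps the result a proper rotation
    R_mode = U @ np.diag([1.0, 1.0, d]) @ Vt   # maximizes tr(F^T R) over rotations
    proper_s = s * np.array([1.0, 1.0, d])     # larger values: more concentrated
    return R_mode, proper_s


# Toy example: F is a scaled 90-degree rotation about the z-axis.
R0 = np.array([[0.0, -1.0, 0.0],
               [1.0,  0.0, 0.0],
               [0.0,  0.0, 1.0]])
R_mode, proper_s = matrix_fisher_mode(10.0 * R0)
print(np.allclose(R_mode, R0), np.round(proper_s, 6))
```

In this toy case the mode recovers `R0`, and scaling F up or down changes only the singular values, that is, how sharply the distribution peaks around that mode.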

Citation

For more details of this research, please see our paper. If you find our paper and repo useful, please consider citing:

@inproceedings{ma2024shape,

title={Shape-guided configuration-aware learning for endoscopic-image-based pose estimation of flexible robotic instruments},

author={Ma, Yiyao and Chen, Kai and Tong, Hon-Sing and Wei, Ruofeng and Ng, Yui-Lun and Kwok, Ka-Wai and Dou, Qi},

booktitle={European Conference on Computer Vision},

pages={259--276},

year={2024},

organization={Springer}

}